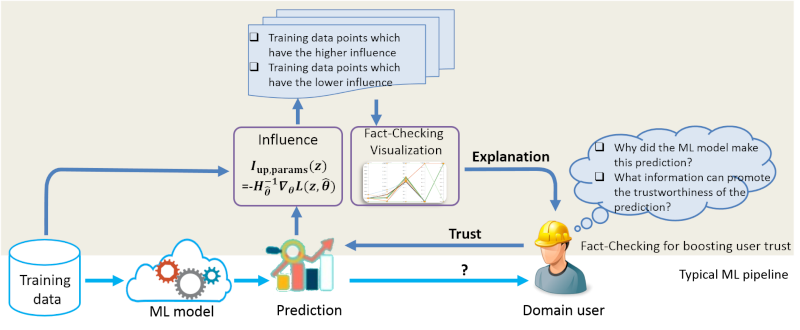

Artificial Intelligence (AI) has long struggled with low user acceptance of delivered solutions, as well as with system misuse, disuse, or even outright failure. These fundamental challenges can be attributed to the "black box" nature of machine learning (ML) methods from the user's perspective. Users simply provide source data to an AI system and select a few menu options, and the system then displays colorful viewgraphs and/or recommendations as output. It is neither clear nor well understood why the ML algorithm made a given prediction, or how trustworthy that prediction, or a decision based on it, actually is. Our research aims to investigate explanation approaches for ML and to understand how humans respond to different explanations, in order to provide trustworthy AI solutions.